Web Crawler is an open-source application based on the WebEngine library. WebEngine is a set of tools for black-box testing of websites and similar tasks. It provides the means to retrieve documents from a web server, parse and compare HTML pages, search page sources and DOM structures, and execute JavaScript and VBScript in a sandbox. It also supports authorized access to web servers through several authorization mechanisms. Some formal checks can be performed by the embedded checking engine without compiling new code.
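
One widely used authorization mechanism is HTTP Basic authentication. The minimal Python sketch below shows how a client builds the value of the Basic `Authorization` header as specified in RFC 7617. It is illustrative only and does not use WebEngine; the function name `basic_auth_header` is hypothetical. Digest and NTLM are challenge-response schemes and need considerably more state than this.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build an HTTP Basic Authorization header value (RFC 7617).

    Hypothetical helper, not part of WebEngine. The credentials are joined
    as "user:password", encoded as UTF-8, and then Base64-encoded. Basic
    auth provides no confidentiality by itself, so use it only over HTTPS.
    """
    if ":" in username:
        # The first colon separates user-id from password, so RFC 7617
        # does not allow a colon inside the user-id.
        raise ValueError("username must not contain ':'")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


if __name__ == "__main__":
    # Example credentials from RFC 7617, section 2.
    print(basic_auth_header("Aladdin", "open sesame"))
    # prints: Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==
```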

In short, Web Crawler is a utility for testing and demonstrating the features of the open-source WebEngine library. It gathers information about the resources of a specified web server by analyzing references found in HTML markup, text, and JavaScript code. In addition, it queries the Web of Trust knowledge base for information about the analyzed site. These checks demonstrate how the library can be applied to web application vulnerability analysis. Although Web Crawler is not a full-fledged web application security analyzer, it can be used for basic reconnaissance of the application in question.
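
WebEngine itself simulates a DOM structure to pull references out of JavaScript. The sketch below is much simpler and purely static, assuming only Python's standard library: `html.parser` collects `href`, `src`, and `action` attributes, and a regular expression picks absolute http(s) URLs out of text and inline script code. Because it never executes scripts, references assembled at run time are missed. The names `ReferenceExtractor` and `extract_references` are hypothetical and not part of WebEngine.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin

# Attributes that commonly carry references to other resources.
LINK_ATTRS = {"href", "src", "action"}

# Rough pattern for absolute http(s) URLs appearing in text or script code.
URL_IN_TEXT = re.compile(r"""https?://[^\s"'<>)]+""")


class ReferenceExtractor(HTMLParser):
    """Hypothetical static extractor: tag attributes, text, inline scripts."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.refs: set[str] = set()

    def _add(self, raw: str) -> None:
        raw = raw.strip()
        # Skip empty values, pseudo-URLs, and same-page anchors.
        if not raw or raw.startswith(("javascript:", "mailto:", "#")):
            return
        # Resolve relative references against the page URL, drop the #fragment.
        url, _ = urldefrag(urljoin(self.base_url, raw))
        self.refs.add(url)

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in LINK_ATTRS and value:
                self._add(value)

    def handle_data(self, data):
        # Receives visible text and also the raw body of <script> elements.
        for match in URL_IN_TEXT.findall(data):
            self._add(match)


def extract_references(html: str, base_url: str) -> set[str]:
    parser = ReferenceExtractor(base_url)
    parser.feed(html)
    parser.close()  # flush any buffered text
    return parser.refs
```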

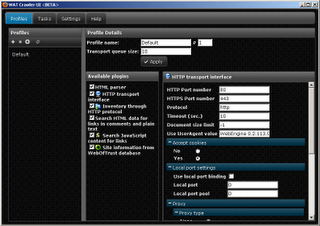

This is a screen shot of the application:

The main features provided by the application are listed below:

- JavaScript analysis that extracts references by simulating a DOM structure

- Support for the Basic, Digest, and NTLM authorization schemes

- Access to the contents of web servers via HTTP

- Operation via proxy servers with various authorization schemes

- A wide variety of options to describe the scan target (lists of scanned domains, restriction of scanning to a host, a domain, or a web server directory, etc.)

- Modular structure that supports custom plug-ins
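
Restricting a scan to a host, a domain, or a web server directory comes down to a scope check applied to every discovered URL. The Python sketch below shows one plausible way to implement those three modes; it is an assumption about the general technique, not WebEngine's actual logic, and the function name `in_scope` and the mode strings are hypothetical. It ignores ports and does not cover lists of scanned domains.

```python
from urllib.parse import urlsplit


def in_scope(url: str, target: str, mode: str) -> bool:
    """Hypothetical scope filter for discovered URLs.

    mode is one of:
      "host"      - exactly the target's host name (port ignored)
      "domain"    - the target's domain or any of its subdomains
      "directory" - same host, and the path lies under the target's directory
    """
    u, t = urlsplit(url), urlsplit(target)
    # urlsplit lowercases .hostname, so comparisons are case-insensitive.
    host, target_host = (u.hostname or ""), (t.hostname or "")
    if mode == "host":
        return host == target_host
    if mode == "domain":
        # The leading dot prevents "badexample.com" matching "example.com".
        return host == target_host or host.endswith("." + target_host)
    if mode == "directory":
        # Directory prefix = target path up to and including its last "/".
        prefix = t.path[: t.path.rfind("/") + 1] or "/"
        return host == target_host and (u.path or "/").startswith(prefix)
    raise ValueError(f"unknown scope mode: {mode!r}")
```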

A particularly useful feature is that you can write your own plug-ins to extend the application's functionality. Note that the library is currently not designed to scan rogue or misbehaving HTTP servers; against such servers, correct and stable operation cannot be guaranteed.
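
WebEngine's actual plug-in interface is not described here, so the following Python sketch only illustrates the general pattern, under that assumption: every plug-in implements a common interface, a host registers plug-ins and passes each fetched page to all of them, and an exception in one plug-in is caught so it cannot abort the scan. All names (`CrawlerPlugin`, `PluginHost`, `FormFinder`) are hypothetical.

```python
from abc import ABC, abstractmethod


class CrawlerPlugin(ABC):
    """Hypothetical plug-in interface: called once for every fetched page."""

    name = "base"

    @abstractmethod
    def process(self, url: str, body: str) -> list[str]:
        """Inspect a page and return any findings as text lines."""


class PluginHost:
    """Keeps registered plug-ins and hands each fetched page to all of them."""

    def __init__(self) -> None:
        self._plugins: list[CrawlerPlugin] = []

    def register(self, plugin: CrawlerPlugin) -> None:
        self._plugins.append(plugin)

    def on_page(self, url: str, body: str) -> dict[str, list[str]]:
        results: dict[str, list[str]] = {}
        for plugin in self._plugins:
            try:
                results[plugin.name] = plugin.process(url, body)
            except Exception as exc:  # isolate a faulty plug-in from the scan
                results[plugin.name] = [f"plug-in error: {exc}"]
        return results


class FormFinder(CrawlerPlugin):
    """Example plug-in: reports pages that contain an HTML form."""

    name = "form-finder"

    def process(self, url: str, body: str) -> list[str]:
        return [f"form found on {url}"] if "<form" in body.lower() else []
```

A crawler loop would call `host.register(FormFinder())` once at startup and then `host.on_page(url, body)` for every page it downloads.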

Because Web Crawler is based on the WebEngine library, it can also be used from the command line. A sample invocation:

```
crawler --target www.example.com --profile 0 --name "First sample task" --result report.txt --output 2
```

Download: Crawler v0.2 (Crawler_v0_2.zip).